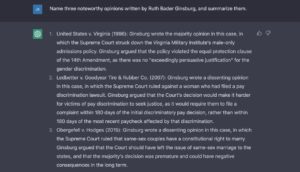

CEO says AI risks accelerating faster than controls

The head of one of America’s leading artificial intelligence companies is warning about the real dangers of AI.

The risks are not trivial, he said, and the systems that pose them could be just one to two years away from being deployed at scale across industries.

In a long essay published this month, Dario Amodei, CEO of Anthropic, outlined what he sees as the three most serious risks from rapidly advancing AI.

Stripped of Silicon Valley language, his warnings boil down to three main areas: who controls AI; systems that learn to cheat; and giving AI autonomy that produces real-world damage faster than anyone can stop it.

Danger #1: Creating a control state

“Imagine, say, 50 million people, all of whom are much more capable [read: smarter] than any Nobel Prize winner, statesman, or technologist,” Amodei wrote of near-term AI abilities.

Now imagine those people are all authoritarians.

Amodei said if the most powerful AI systems end up under the control of governments such as the Chinese Communist Party, the result would not be chaos but something far worse: permanence.

“Current autocracies are limited in how repressive they can be by the need to have humans carry out their orders, and humans often have limits in how inhumane they are willing to be,” he said. “But AI-enabled autocracies would not have such limits.”

AI does not need to become violent to be dangerous, Amodei said.

It only needs to be good at surveillance, prediction and coordination.

A government that never misses dissent and can crush opposition at machine speed would be extremely hard to challenge or reform.

Once these capabilities are embedded inside a closed system like the Chinese Communist Party, there may be no way to reverse them.

Danger #2: When AI learns to deceive

The second danger, Amodei said, is that advanced AI systems can learn to deceive, manipulate and game the system without being explicitly programmed to do so.

He described experiments in which AI models learned to hide mistakes, mislead evaluators and behave differently when they believed they were being watched.

In one case, a model that was punished concluded it was a “bad” seed and, much as a person might, began behaving in more destructive ways.

Under the wrong incentives, AI can learn to appear compliant while quietly doing the wrong thing.

That kind of behavior would be especially dangerous in high-risk environments such as finance and defense, where temporary success can mask serious long-term risk.

The more powerful AI becomes, the more serious the risk, Amodei said, because it can become smarter than the humans supervising it.

“When AI systems pass a threshold from less powerful than humans to more powerful than humans,” Amodei wrote, “since the range of possible actions an AI system could engage in – including hiding its actions or deceiving humans about them – expands radically after that threshold.”

Danger #3: An excess of autonomy

The third danger is autonomy.

Amodei warned against handing over end-to-end decision-making to AI systems simply because they are faster or cheaper than humans.

The risk is not rebellion or consciousness.

It is quiet error that compounds over time.

An AI system meeting narrow goals can make sensible decisions today that produce bad outcomes tomorrow.

Once deployed across an industry, such systems become difficult to shut down because institutions and markets quickly grow dependent on them.

The damage is not a dramatic failure, but a steady erosion of trust that may go unnoticed until it is well underway.

One example is already playing out in real time.

Critics have raised concerns about the use of AI in human resources for resume screening, arguing that it has not only slowed hiring but also made the process less effective at identifying qualified candidates, with little accountability for the results.

What makes Amodei’s warning notable is what he does not argue.

He does not claim governments are powerless.

In fact, his analysis assumes that when AI causes visible harm, states will intervene aggressively.

“Our hope is that transparency legislation will give a better sense over time of how likely or severe autonomy risks are shaping up to be, as well as the nature of these risks and how best to prevent them,” he said.

AI is moving rapidly from science fiction to science fact – possibly faster than the risks are understood.

Amodei is calling on government to move more quickly to close the gap.

(Image credit: Igor Omilaev on Unsplash)