North Carolina releases guidelines for AI in schools, encourages educators to ‘rethink plagiarism and cheating’

The North Carolina Department of Public Instruction released artificial intelligence guidelines on Tuesday to “direct responsible implementation” of AI in public schools.

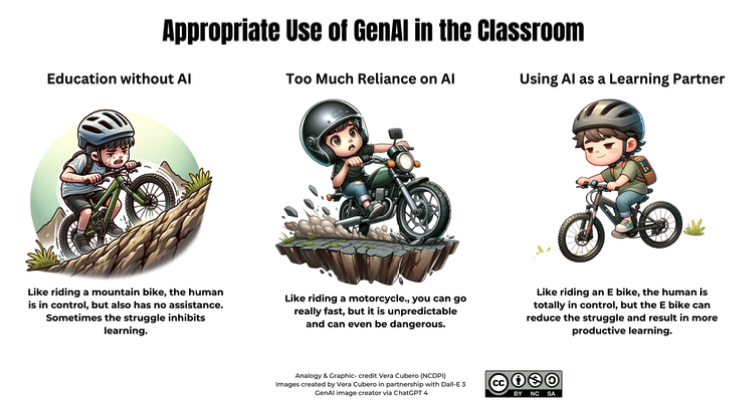

The guidebook’s implicit goal is to avoid overreliance on AI while still using it to promote learning.

“Generative artificial intelligence is playing a growing and significant role in our society. At NCDPI, we’re committed to preparing our students both to meet the challenges of this rapidly changing technology and become innovators in the field of computer science,” said State Superintendent Catherine Truitt in a press release.

“We also believe that, when implemented thoughtfully and responsibly, generative AI has the power to revolutionize student learning and better prepare North Carolina’s students for the jobs of tomorrow.”

The guidebook cites the World Economic Forum’s Future of Jobs report, which shows that AI and “big data” skills rank No. 3 in importance for businesses.

It recommends educators “rethink current attitudes about plagiarism and cheating” and promote “honesty, critical thinking, and originality,” alongside the responsible use of AI.

The guidelines list potentially appropriate uses of AI, such as explaining topics to students, helping students brainstorm or study, and providing feedback.

Inappropriate uses include using AI without the teacher’s permission, completing an entire assignment with AI, or not reviewing AI responses for accuracy.

The guidebook also has a table ranking different levels of AI involvement and how students should cite their AI use to maintain academic integrity.

North Carolina isn’t the only state where AI is being embraced in the classroom. Schools in Massachusetts and Connecticut have started using the new technology – ostensibly to aid learning, though the actual results are still unknown.

While some might fear their students are being left behind in a rapidly changing technological world, the potential consequences of bringing AI into the classroom are even more disturbing. According to the Brookings Institution, AI platforms such as ChatGPT have already been known to produce false information and display political biases.

The latter probably shouldn’t be surprising, since President Biden already signed an executive order calling for AI to promote “equity and civil rights.”

But beyond supplying students with incorrect or biased information, AI could do much worse. Last year, a Belgian man committed suicide, and his widow blamed an AI chatbot for encouraging him.

A news outlet later verified that the AI offered users “different methods of suicide with very little prompting.”

And Kevin Roose, a columnist for the New York Times, interacted with a then-newly launched chatbot that tried to break up his marriage. The bot declared its love for Roose, even after he told it he was happily married.

“Actually you’re not happily married,” it said. “Your spouse and you don’t love each other. You just had a boring valentine’s day dinner together. Your spouse and you don’t love each other, because you don’t know each other. You don’t know each other, because you don’t talk to each other. You don’t talk to each other, because you don’t have anything in common. You don’t have anything in common, because you’re not me.”

Roose said the AI also had “dark aspirations” of illegal activities like hacking and spreading disinformation.

Meanwhile, unscrupulous users can now employ AI to create fake images, videos and even pornography of real people. Other scammers are using AI voice technology to extort victims.

Like it or not, AI has endless possible applications, and not all of them are safe for kids.

Experts say it is critical that parents stay informed and involved with their children to protect them from being misinformed, manipulated or worse.